Deep Symbolic Learning (DSL) is a Neuro-Symbolic Integration (NeSy) framework for solving tasks requiring perception and reasoning. Differently from other NeSy systems, which rely on continuous relaxation of logical knowledge, DSL introduces discrete decisions within the model pipeline. This is achieved by DSL trough the usage of reinforcement learning policies in a novel way, discretizing latent internal symbols inside the network, while keeping the architecture trainable end-to-end. Each symbol extracted is associated with a score between zero and one. This score is similar to the expected reward value in reinforcement learning, but in DSL we interpret it using fuzzy logic. This approach allows us to combine the truth values of internal choices to produce the final output's truth value.

Moreover, DSL is the first NeSy system capable of learning both the perception function and the symbolic knowledge in an end-to-end fashion with supervision only for the downstream task and with minimal biases (only the compositional structure is given). For instance, DSL can learn the function that encodes summation in the MNIST Addition task. This means our model is not only capable of interpreting and processing information, but also of learning underlying symbolic representations directly from data. It’s a promising step towards more comprehensive and robust models in the Neuro-Symbolic Integration field.

Results

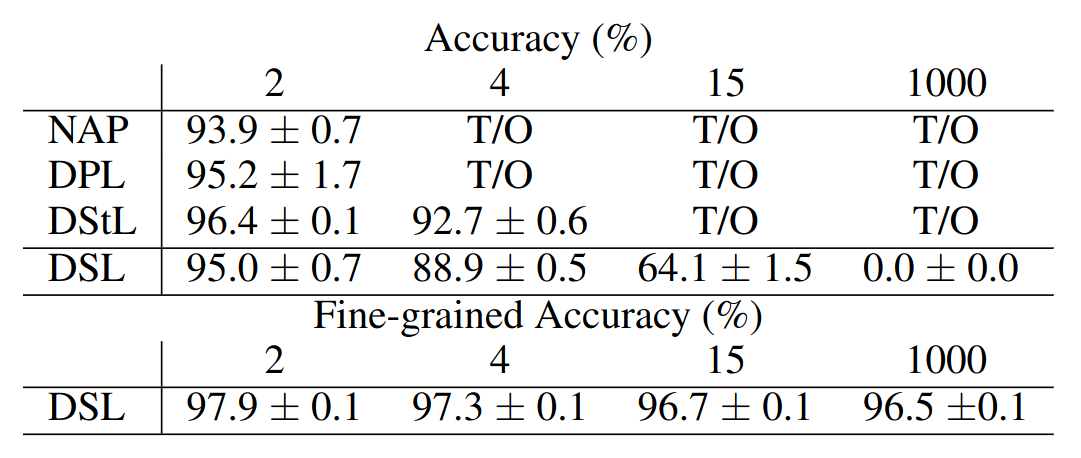

In the following table we report the accuracy on the MNIST MultiDigitSum task at a varying number of digits. For further experiments/analysis, please refer to our paper.

Citation

If you are using DSL in your work, please consider citing our paper:

@article{daniele2022deep,

title={Deep Symbolic Learning: Discovering Symbols and Rules from Perceptions},

author={Daniele, Alessandro and Campari, Tommaso and Malhotra, Sagar and Serafini, Luciano},

journal={arXiv preprint arXiv:2208.11561},

year={2023}

}

Acknowledgements

TC and LS acknowledge the support of the PNRR project FAIR - Future AI Research (PE00000013), under the NRRP MUR program funded by the NextGenerationEU.